The New Machine Learning Workshop

INTRODUCTION TO THE MATHEMATICS IN MACHINE LEARNING!

If you know zero math and zero machine learning, then these talks are for you. Jeff will do his best to explain fairly hard mathematics to you. If you know a bunch of math and/or a bunch of machine learning, then these talks are also for you. Jeff tries to spin the ideas in new ways.

Abstract:

Computers can now drive cars and find cancer in X-rays. For better or worse, this will change the world (and the job market). Strangely, designing these algorithms is not done by telling the computer what to do or even by understanding what the computer does. The computers teach themselves from lots and lots of data and lots of trial and error. This learning process is analogous to how brains evolved over billions of years of learning. The machine itself is a neural network, which models both the brain and silicon AND-OR-NOT circuits, both of which are great for computing. What sets neural networks apart is that what they compute is determined by weights, and small changes in these weights give you small changes in the result of the computation. The process for finding an optimal setting of these weights is analogous to finding the bottom of a valley. “Gradient Descent” achieves this by using the local slope of the hill (derivatives) to direct the travel down the hill, i.e. to choose small changes to the weights.
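
For readers who like to see ideas as code, here is a minimal sketch of the "walk down the hill" idea. It is not taken from Jeff's talks: the one-weight valley `height`, its slope `height_slope`, and the `step_size` and `num_steps` values are all made up for illustration. A real neural network has many weights and gets its slopes from data, but the loop is the same in spirit.

```python
def gradient_descent(slope, start, step_size=0.1, num_steps=100):
    """Repeatedly take a small step against the local slope, starting at `start`."""
    w = start
    for _ in range(num_steps):
        # A positive slope means the hill rises to the right, so step left
        # (and vice versa). Steeper slope means a bigger step.
        w = w - step_size * slope(w)
    return w


# Hypothetical valley with a single weight w: its bottom is at w = 3.
def height(w):
    return (w - 3.0) ** 2


# Derivative of height(w), i.e. the local slope of the hill at w.
def height_slope(w):
    return 2.0 * (w - 3.0)


best_w = gradient_descent(height_slope, start=0.0)
print(best_w, height(best_w))  # both should be very close to 3 and 0
```

With these made-up settings each step shrinks the distance to the bottom of the valley by a constant factor, so the weight settles very near 3. Making `step_size` too large would overshoot the bottom instead of approaching it.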

The new Machine Learning workshop series starts this week:

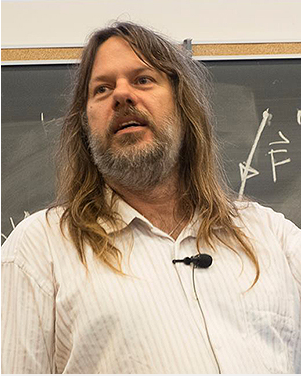

Jeff Edmonds has been a computer science professor at York since 1995, after getting his bachelor's at Waterloo and his Ph.D. at the University of Toronto. His background is theoretical computer science. His passion is explaining fairly hard mathematics to novices. He has never done anything practical in machine learning, but he is eager to help you understand his musings about the topic.

https://www.eecs.yorku.ca/~jeff/